The goal of this article is to give a quick run down of the current features of the NSimpleOlap library, this is still in development with new features being added and with its API still undergoing refinement.

NSimpleOlap is an OLAP engine, more precisely an in-memory OLAP engine, and it’s intended for educational use and for application development. With simple to use API to allow for easy setup and querying, which I hope will make it easier to demonstrate the usefulness of OLAP engines in solving certain classes of problems.

This library will be provided as is and with no front-end, it is not directed towards use in finance, fancy dashboards or regular Business Intelligence use cases. In which over-hyping and exaggerated licensing fees, have in my opinion limited the scope of use of these systems and undermined their acceptance. Limiting their use to the BI silo that is often only for the eyes of business managers.

But it can be much more…

Starting With The Demo Application

I will start with the demo application, it makes for easier presentation and exploration of the main concepts required to query a data cube. This is a simple console application, a throwback in time for those that are more graphically minded, but it will do for the purposes of this article.

You can find the Demo application and the NSimpleOlap library following this link:

The seed data in this demo application is a very basic flat file CSV file, although the base dimensions are referenced as numeric ids that have a correspondent in a supporting CSV file.

category, gender, place, Date, expenses, items

1, 1, 2, 2021-01-15,1000.12, 30

2, 2, 2, 2021-03-05,200.50, 5

4, 2, 5, 2021-10-17,11500.00, 101

3, 2, 2, 2021-08-25,100.00, 20

2, 1, 6, 2021-02-27,10.10, 5

1, 2, 2, 2021-08-30,700.10, 36

5, 2, 5, 2021-12-15,100.40, 31

1, 1, 3, 2021-09-07,100.12, 12

3, 2, 3, 2021-06-01,10.12, 30

2, 2, 2, 2021-06-05,10000.12, 30

1, 2, 1, 2021-05-04,100.12, 1

4, 2, 2, 2021-01-03,10.12, 6

2, 2, 3, 2021-11-09,100.12, 44

1, 2, 3, 2021-07-01,10.12, 8

4, 1, 1, 2021-04-24,100.12, 5

1, 1, 6, 2021-06-02,10.12, 7

4, 3, 6, 2021-05-18,100.12, 30

2, 1, 2, 2021-08-21,60.99, 8

1, 2, 2, 2021-02-16,6000.00, 89

4, 3, 6, 2021-03-07,600.00, 75

1, 1, 6, 2021-01-01,10.00, 12

4, 2, 2, 2021-07-28,2000.00, 30

5, 2, 6, 2021-12-20,50.10, 11

3, 1, 3, 2021-06-08,130.50, 2

Executing the demo application will show the following initial console messages.

You can type help to get a basic example of how you can make simple queries, and get the available dimensions and measures.

You can type a simple query, and get the outcome once you hit enter.

As you can see the results aren’t chronologically ordered, but in the order the cells were picked up by the query engine. This will be resolved once order selection is implemented.

Here’s another example.

And another example, but now focusing in the records that there is no data for gender.

As you can see some of the outputs have many empty spaces, because the test data isn’t very big. So in terms of the space of all available aggregations the current data cube is very sparse. But you can still view the data through different perspectives and have an idea of what is possible.

Starting Your Own Cube

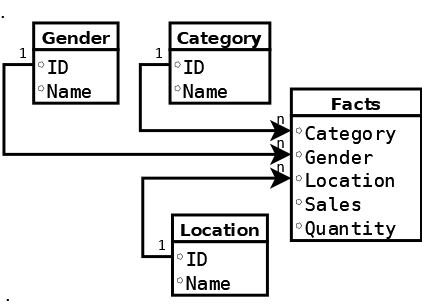

At this stage of development you can define dimensions, measures and metrics. Being that you can define regular dimensions that define lists of attributes or entities (colour, gender, city, country, etc.), or define Date dimensions that need to be handled differently. Since these follow defined calendar patterns and need to be generated from the incoming data in the facts tables.

Measures are variables that were observed from the entities that are defined in the facts table, these can be quantities of goods sold or bought, value or price of goods, total value of invoice, temperature, rainfall, etc.. These will be aggregated inside the cube in various combinations, although this will entail a certain loss of context. Since the aggregated cell that resulted from multiple data points won’t tell much about the pattern of the input data. But a cube is about exploring the forest and not about the individual trees.

Metrics are expressions that are calculated at aggregation time, and these allow to make some extra calculations as well as to keep some extra data context in the cell. These calculated values can be averages, minimum and maximum values, or any expression made with a composition of the implemented operations.

Setting Up Regular Dimensions

When adding new dimensions you will need to initially setup your facts data source. In this particular example we will need to specify a CSV file and add the fields from the file that we want as sources for your Cube. Also, you will need to specify the data source that has the dimension member. Which will have the column that will be used as an id and the column that will be used as the dimension member name.

CubeBuilder builder = new CubeBuilder();

builder.AddDataSource(dsbuild =>

{

dsbuild.SetName("sales")

.SetSourceType(DataSourceType.CSV)

.SetCSVConfig(csvbuild =>

{

csvbuild.SetFilePath("TestData//facts.csv")

.SetHasHeader();

})

.AddField("category", 0, typeof(int));

})

.AddDataSource(dsbuild =>

{

dsbuild.SetName("categories")

.SetSourceType(DataSourceType.CSV)

.AddField("id", 0, typeof(int))

.AddField("description", 1, typeof(string))

.SetCSVConfig(csvbuild =>

{

csvbuild.SetFilePath("TestData//dimension1.csv")

.SetHasHeader();

});

});

Then you will need to map the columns in your fact data source with your cube dimensions.

builder.SetSourceMappings((sourcebuild) =>

{

sourcebuild.SetSource("sales")

.AddMapping("category", "category");

})

And then add the metadata mappings from dimension members data sources.

builder.MetaData(mbuild =>

{

mbuild.AddDimension("category", (dimbuild) =>

{

dimbuild.Source("categories")

.ValueField("id")

.DescField("description");

});

});

Setting Up Measures

Getting a measure into a cube requires only two steps, first map the measure column from the facts data source.

builder.AddDataSource(dsbuild =>

{

dsbuild.SetName("sales")

.SetSourceType(DataSourceType.CSV)

.SetCSVConfig(csvbuild =>

{

csvbuild.SetFilePath("TestData//tableWithDate.csv")

.SetHasHeader();

})

.AddField("category", 0, typeof(int))

.AddField("expenses", 4, typeof(double));

})

And then add the measure metadata mapping for the cube.

builder.MetaData(mbuild =>

{

mbuild.AddDimension("category", (dimbuild) =>

{

dimbuild.Source("categories")

.ValueField("id")

.DescField("description");

})

.AddMeasure("spent", mesbuild =>

{

mesbuild.ValueField("expenses")

.SetType(typeof(double));

});

});

Setting Up Date Dimensions

Adding a Date dimension will add an extra layer of complexity, since you will need to specify what kind of Date levels you want the data to be sliced into.

You will start with mapping the Date field and in this case specify the date time format that it was set on the CSV file.

builder.AddDataSource(dsbuild =>

{

dsbuild.SetName("sales")

.SetSourceType(DataSourceType.CSV)

.SetCSVConfig(csvbuild =>

{

csvbuild.SetFilePath("TestData//tableWithDate.csv")

.SetHasHeader();

})

.AddField("category", 0, typeof(int))

.AddDateField("date", 3, "yyyy-MM-dd")

.AddField("expenses", 4, typeof(double));

});

Add the mapping to the data source and indicate what label fields you want.

builder.SetSourceMappings((sourcebuild) =>

{

sourcebuild.SetSource("sales")

.AddMapping("category", "category")

.AddMapping("date", "Year", "Month", "Day");

})

When defining the dimension metadata specify the dimension labels and the type of information the data will be transformed. In this this case you will have three dimensions: Year, Month and Day.

builder.MetaData(mbuild =>

{

mbuild.AddDimension("category", (dimbuild) =>

{

dimbuild.Source("categories")

.ValueField("id")

.DescField("description");

})

.AddDimension("date", dimbuild => {

dimbuild

.SetToDateSource(DateTimeLevels.YEAR, DateTimeLevels.MONTH, DateTimeLevels.DAY)

.SetLevelDimensions("Year", "Month", "Day");

})

.AddMeasure("spent", mesbuild =>

{

mesbuild.ValueField("expenses")

.SetType(typeof(double));

});

});

Setting Up Metrics

At the moment metrics can only be set after the Cube is initialized and not at configuration time, since that will require parsing text expressions. But you can still add metrics using the expression building API.

Setting up a metric will require you to identify what measures you want to use, and what maths operations are necessary to build it. As a simple example…

var cube = builder.Create<int>();

cube.Initialize();

cube.BuildMetrics()

.Add("Add10ToQuantity", exb => exb.Expression(e => e.Set("quantity").Sum(10)))

.Create();

This won’t do much to further the understanding of the data but it’s a start.

For more useful expressions you can also combine two measures and get rates and ratios.

cube.BuildMetrics()

.Add("RatioSpentToQuantity", exb =>

exb.Expression(e => e.Set("spent").Divide(ex => ex.Set("quantity").Value())))

.Create();

Or use some useful functions and retain some context from the source data.

cube.BuildMetrics()

.Add("AverageOnQuantity",

exb => exb.Expression(e => e.Set("quantity").Average()))

.Add("MaxOnQuantity",

exb => exb.Expression(e => e.Set("quantity").Max()))

.Add("MinOnQuantity",

exb => exb.Expression(e => e.Set("quantity").Min()))

.Create();

Getting More With Queries

A data cube is nothing if it cannot be queried, the NSimpleOlap fluent query API borrows many concepts from the MDX query language. You will need to get familiarized to specify your rows and columns as tuples. In general that is no different as setting paths or using something like xpath in XSL or any DOM XML API. You are not only slicing the cube but you are also defining what data hierarchies you want to visualize.

Defining a simple query and sending the output to the text console.

cube.Process();

var queryBuilder = cube.BuildQuery()

.OnRows("category.All.place.Paris")

.OnColumns("sex.All")

.AddMeasuresOrMetrics("quantity");

var query = queryBuilder.Create();

query.StreamRows().RenderInConsole();

| | sex male | sex female

category toys,place Paris | 12 | 8

category furniture,place Paris | 2 | 30

category clothes,place Paris | | 44

You can also select both measures and metrics at the same time in a query.

var queryBuilder = cube.BuildQuery()

.OnColumns("sex.All")

.AddMeasuresOrMetrics("quantity", "MaxOnQuantity", "MinOnQuantity");

var query = queryBuilder.Create();

var result = query.StreamRows().ToList();

Making filters on the aggregate values and the facts is also possible. First we will filter on the aggregates.

var queryBuilder = cube.BuildQuery()

.OnRows("category.All.place.All")

.OnColumns("sex.All")

.AddMeasuresOrMetrics("quantity")

.Where(b => b.Define(x => x.Dimension("sex").NotEquals("male")));

var query = queryBuilder.Create();

var result = query.StreamRows().ToList();

Then we will reduce the scope of the data by filtering on a measure.

var queryBuilder = cube.BuildQuery()

.OnRows("category.All.place.All")

.OnColumns("sex.All")

.AddMeasuresOrMetrics("quantity")

.Where(b => b.Define(x => x.Measure("quantity").IsEquals(5)));

var query = queryBuilder.Create();

var result = query.StreamRows().ToList();

Making filters on the facts will generate a cube with a smaller subset of data. This makes sense since the main Cube doesn’t have the full context of the facts, and any operation that requires digging on the source facts will require generating a new Cube to represent those aggregations.

In Conclusion…

The NSimpleOlap core is getting more stable and it’s already possible to query on complex hierarchies of dimensions. But there is still much to do, getting Time dimensions, adding dimension levels through metadata, transformers to convert measure data into interval dimensions to be able to query age ranges, etc.. Also, some more work is required to have a structure to enable better rendering of row and column headers in a hierarchical structure. Much to do, and so little time…